Evaluating and Selecting AI Tools

The rapid growth of the global AI software market, projected to exceed $300 billion by 2026, has made selecting the right tool overwhelming. Inundated with new research, tools, and startups, organizations risk investing in solutions that don't scale, fail to integrate, or overlook crucial compliance requirements.

In this article, we explore a high-level due diligence framework for users and organizations to consider.

Why Rigorous Evaluation is Non-Negotiable

AI systems introduce unique risks, including algorithmic biases, data security vulnerabilities, and complex compliance issues. Skipping thorough evaluation can lead to the kinds of failures behind widely reported statistics: over 80% of AI and machine learning (ML) projects never advance beyond the proof-of-concept (PoC) stage, and 70% of organizations that fail to define clear AI use cases and success criteria end up with underperforming projects.

A concerning 78% of organizations utilize third-party AI tools, and more than half of all AI failures originate from these external tools. Inadequate data governance or security issues also account for 60% of AI project failures, even though most organizations prioritize data security.

Evaluation ensures that you understand the technology’s capabilities, limitations, and ethical implications, so you can avoid becoming one of these statistics.

The Core Components of AI Tool Evaluation

When conducting due diligence, evaluate multiple dimensions against each specific use case you have in mind. The evaluation may differ by domain or by task and may eventually require custom benchmarking, but there are also some core qualities to look for in any tool.

1. Technical Capabilities and Scalability

A thorough technical assessment should go beyond mere functionality and examine, at a high level, how the model was architected and developed.

First, evaluate the underlying algorithms for effectiveness, scalability, and ability to adapt to new data. Assess the data sources, integrity, and how the data is collected and cleaned, since AI system performance hinges heavily on data quality.

While accuracy is important, never focus solely on it. Scalability evaluation demands metrics related to latency, throughput, and resource utilization (CPU, GPU, memory). These metrics matter most when planning to move a use case to production, and each use case will have different technical requirements.
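As a rough illustration of the latency and throughput metrics mentioned above, the sketch below times repeated calls to a model and summarizes the results. The `benchmark` helper and the `fake_model` stand-in are hypothetical names introduced only for this example; in practice you would pass in your own inference call and a representative sample of inputs, and measure resource utilization separately with your platform's monitoring tools.

```python
import math
import statistics
import time
from typing import Callable, Sequence


def benchmark(predict: Callable[[str], object], inputs: Sequence[str]) -> dict:
    """Time each call to `predict` and summarize latency and throughput."""
    if not inputs:
        raise ValueError("inputs must not be empty")

    latencies = []
    start = time.perf_counter()
    for item in inputs:
        t0 = time.perf_counter()
        predict(item)
        latencies.append(time.perf_counter() - t0)
    total = time.perf_counter() - start

    latencies.sort()
    # Nearest-rank 95th percentile of the sorted latencies.
    p95_index = max(0, math.ceil(0.95 * len(latencies)) - 1)
    return {
        "requests": len(latencies),
        "p50_latency_s": statistics.median(latencies),
        "p95_latency_s": latencies[p95_index],
        "throughput_rps": len(latencies) / total,
    }


def fake_model(prompt: str) -> str:
    # Hypothetical stand-in for a real model or API call.
    time.sleep(0.001)
    return prompt.upper()


report = benchmark(fake_model, ["sample prompt"] * 50)
print(report)
```

Reporting a tail percentile alongside the median is a deliberate choice here: a tool with a good average but a slow tail can still feel unreliable under production load.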

Finally, ensure the system is protected against cyberattacks and can operate consistently and accurately under expected conditions, adapting to changing environments and adversarial inputs. Look for guardrails, safety benchmarks and how the model was regularized and normalized to ensure robustness against attacks and exploitation.

2. Legal, Ethical, and Fairness Review

Responsible AI ensures that technology aligns with institutional values and legal standards, which is important for most life sciences use cases.

For commercial models, verify the AI system adheres to critical data protection laws like GDPR, HIPAA, or CCPA. If you are using an open source model in your own environment, then more of the burden is on your organization to manage this end-to-end.

Investigate if the AI system was trained on biased data (e.g., gender, race) and confirm that ongoing bias monitoring systems are in place to identify and mitigate these issues. Many models will not disclose this, so it is good to check benchmarks or develop your own.
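If you do develop your own bias check, one simple starting point is to compare how often the model returns a positive outcome for each group. The sketch below computes per-group selection rates and their largest gap (often called the demographic parity difference). The function names and the toy data are assumptions made for illustration, and this is only one of several fairness metrics you might track.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Share of positive (1) predictions within each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(predictions, groups):
    """Gap between the highest and lowest group selection rates."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Toy data: binary model decisions and a group label for each record.
preds = [1, 0, 1, 1, 0, 1, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

rates = selection_rates(preds, groups)
gap = demographic_parity_difference(preds, groups)
print(rates, gap)
```

A gap near zero means the groups receive positive outcomes at similar rates; what counts as an acceptable gap depends on the use case and applicable regulations.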

It is also important to check whether the system includes explainability features. If it does not, the organization using the system should develop robust human-in-the-loop processes to compensate, or build explainability features of its own, in line with LLMOps best practices.

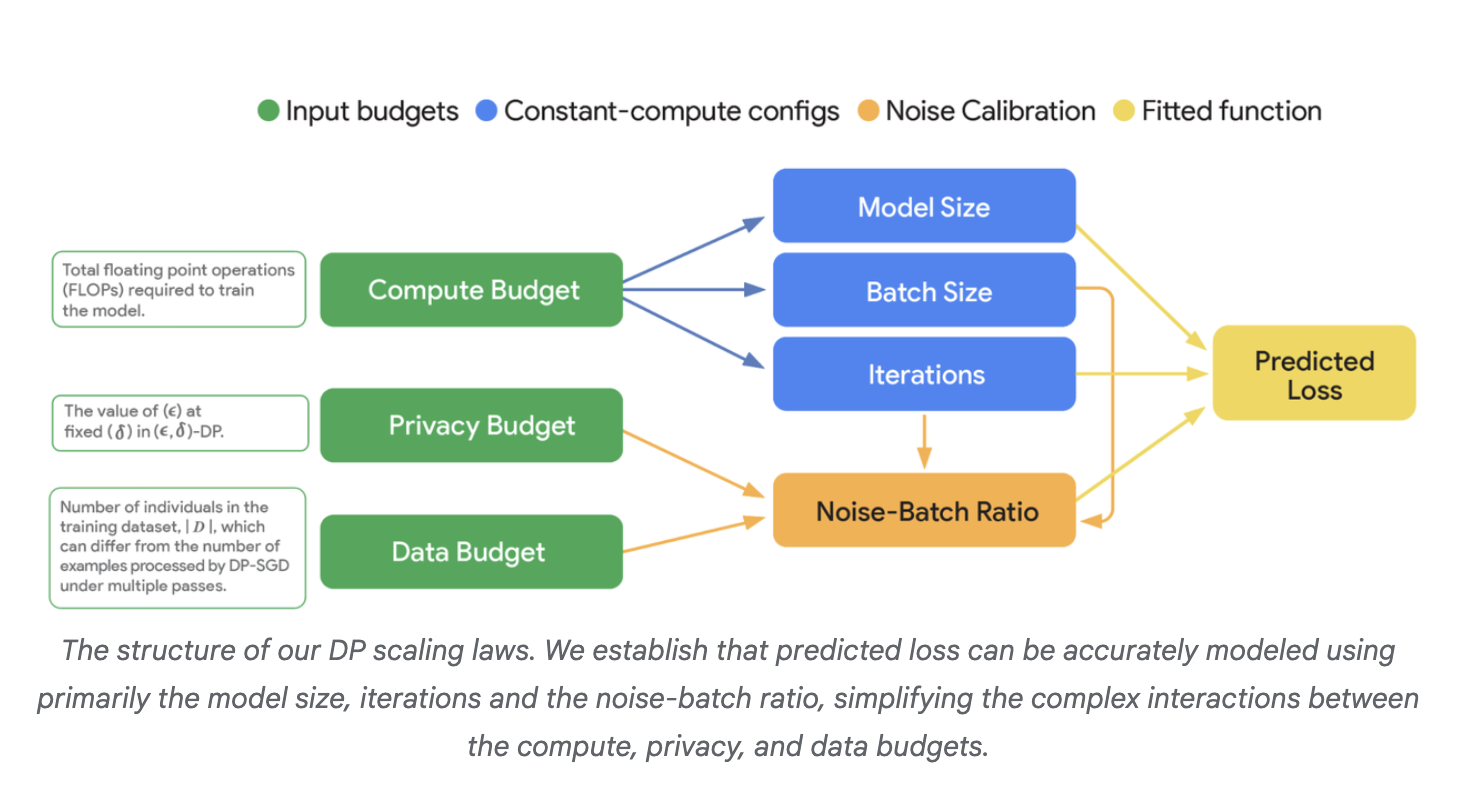

If personal data is involved, ensure privacy-enhancing techniques (PETs) like data anonymization, de-identification, or differential privacy are implemented. Some newer models are also trained with differential privacy from the outset.
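To make differential privacy more concrete, here is a minimal sketch of the Laplace mechanism applied to a counting query. It is a textbook illustration rather than a production implementation: the `private_count` helper, the `epsilon` value, and the sample ages are all assumptions for this example, and real deployments typically rely on a vetted library.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    # The difference of two i.i.d. exponential draws with mean `scale`
    # follows a Laplace distribution centered at 0 with that scale.
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(records, predicate, epsilon: float, rng: random.Random) -> float:
    """Noisy count of records matching `predicate`.

    A counting query changes by at most 1 when one record is added or
    removed (sensitivity 1), so the noise scale is 1 / epsilon.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(scale=1 / epsilon, rng=rng)


# Hypothetical patient ages; the query asks how many are 65 or older.
ages = [34, 71, 68, 45, 80, 52]
rng = random.Random(42)
noisy = private_count(ages, lambda age: age >= 65, epsilon=1.0, rng=rng)
print(noisy)
```

A smaller `epsilon` adds more noise and gives stronger privacy, at the cost of less accurate answers.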

3. Integration, Usability, and Vendor Support

A sophisticated AI tool is only valuable if your team can use it effectively and integrate it seamlessly.

Assess compatibility with your existing systems, such as CRM, ERP, or data warehouses. Well-documented APIs and SDKs are a plus. Look for user-friendly interfaces and comprehensive training resources to minimize the learning curve.

The success of AI often relies on the team behind it. Evaluate the vendor’s reputation, 24/7 technical support availability, and responsiveness. For third-party tools, ask for commitments to tight SLAs covering security vulnerability remediation and system availability.

A Structured Approach: How to Evaluate AI Tools

The NIST AI Risk Management Framework (AI RMF 1.0) outlines four core functions (GOVERN, MAP, MEASURE, and MANAGE), which offer a useful reference for evaluating tools.

Step 1: Define Objectives and Map Context (MAP Function)

Clearly identify the specific problem you want the AI tool to solve, which could be customer service automation, data analytics, or scientific research. This clarity is the foundation for smart AI investment, as organizations with well-defined use cases are 30 to 40% more likely to achieve successful deployments.

The MAP function establishes the context, including the intended purpose, potential risks, laws, norms, and the target audience.

Step 2: Build vs. Buy Decision

A crucial strategic step is determining whether to develop the AI solution internally or adopt an external tool. Columbia University outlines structured criteria for this decision, running from consuming off-the-shelf apps to building custom models from scratch:

| Approach | Description | Best when |
| --- | --- | --- |
| Consume | Use off-the-shelf GenAI applications (e.g., Microsoft Copilot, ChatGPT Pro). | You want immediate value with minimal setup |
| Embed | Integrate GenAI APIs into existing applications (e.g., OpenAI API, Anthropic Claude API). | You are integrating AI into existing apps |
| Extend | Augment GenAI via Retrieval-Augmented Generation (RAG) using institutional knowledge. | The tool needs to answer from institutional knowledge |
| Customize | Fine-tune GenAI models for domain-specific language, tone, or format (e.g., OpenAI fine-tuning). | You need domain-specific tone or formats |
| Build | Develop custom models from scratch. | You need full control over architecture, data, and weights |

Step 3: Measure and Score Potential Tools (MEASURE Function)

Once potential tools are shortlisted, objective comparison is necessary. A weighted scoring framework helps ensure consistency and alignment with institutional priorities. Criteria to consider:

| Criterion | What to evaluate | Example weight |
| --- | --- | --- |
| Performance | Speed, scalability, and state-of-the-art accuracy under real-world workloads. | 25% |
| Cost | Licensing, usage, infrastructure costs, and pricing transparency. | 20% |
| Compliance & Security | Support for regulatory/security standards (e.g., FERPA, HIPAA, GDPR). | 15% |
| Integration & Tooling | Compatibility with existing systems, APIs, SDKs, and documentation. | 15% |
| Customization | Ability to fine-tune or configure for domain-specific use. | 15% |
| Community & Support | Documentation quality, vendor responsiveness, and community activity. | 10% |
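The weighted scoring framework above can be sketched in a few lines of code. The weights below mirror the example weights listed for each criterion; the 1-to-5 rating scale, the tool names, and the ratings themselves are hypothetical and only illustrate the arithmetic.

```python
# Example weights from the criteria above (they sum to 100%).
WEIGHTS = {
    "performance": 0.25,
    "cost": 0.20,
    "compliance_security": 0.15,
    "integration_tooling": 0.15,
    "customization": 0.15,
    "community_support": 0.10,
}


def weighted_score(ratings: dict) -> float:
    """Combine per-criterion ratings into one score using WEIGHTS."""
    missing = WEIGHTS.keys() - ratings.keys()
    if missing:
        raise ValueError(f"missing ratings for: {sorted(missing)}")
    return sum(WEIGHTS[name] * ratings[name] for name in WEIGHTS)


# Hypothetical ratings on a 1-5 scale (5 is best) for two shortlisted tools.
candidates = {
    "tool_a": {"performance": 4, "cost": 3, "compliance_security": 5,
               "integration_tooling": 4, "customization": 2, "community_support": 4},
    "tool_b": {"performance": 5, "cost": 2, "compliance_security": 3,
               "integration_tooling": 3, "customization": 4, "community_support": 3},
}

scores = {name: weighted_score(r) for name, r in candidates.items()}
ranking = sorted(scores, key=scores.get, reverse=True)
print(scores, ranking)
```

In this toy comparison, tool_a scores about 3.65 and tool_b about 3.45, so tool_a ranks first even though tool_b rates higher on raw performance. Adjusting the weights to match your institutional priorities can change that ordering.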

Step 4: Validate Performance with a Pilot Program (MANAGE Function)

Before committing to a full deployment, run a pilot program. Pilot projects allow the team to test usability, accuracy, and integration in a controlled environment. Organizations that conduct AI pilots report higher satisfaction and 40% fewer implementation issues.

Leverage Existing Frameworks and Tools

Many existing frameworks can be referenced directly or tailored to your own needs.

NIST AI RMF 1.0 is a voluntary framework that guides the responsible development and use of AI systems. It emphasizes trustworthiness characteristics such as Valid & Reliable, Safe, Secure & Resilient, Accountable & Transparent, Explainable & Interpretable, Privacy-Enhanced, and Fair.

AI tools themselves can also be used to streamline the due diligence process, automating tasks like document review, data analysis, and risk identification. Natural Language Processing (NLP) models, for instance, can quickly scan contracts for compliance risks.

Popular open-source tools for development and evaluation include TensorFlow, PyTorch, Hugging Face Transformers, and Apache Spark. For model monitoring, tools like MLflow, Neptune.ai, and Comet ML help track performance and identify model drift (decay due to changing data patterns or real-world conditions).
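To illustrate what detecting drift can look like in practice, the sketch below computes the Population Stability Index (PSI), one common way to compare a feature's distribution at training time with what is seen in production. It does not use any of the tools named above; the function name, bin count, and sample data are assumptions for this example.

```python
import math


def population_stability_index(expected, actual, bins=10):
    """PSI between a baseline sample and a newer sample of one feature."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if all values are equal

    def bucket_shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            # Clamp values outside the baseline range into the edge buckets.
            counts[min(max(idx, 0), bins - 1)] += 1
        # A small floor avoids log(0) for empty buckets.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = bucket_shares(expected), bucket_shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Hypothetical feature values: a baseline, and a sample shifted upward.
baseline = [i / 100 for i in range(100)]
shifted = [0.5 + i / 200 for i in range(100)]

psi_same = population_stability_index(baseline, baseline)
psi_shifted = population_stability_index(baseline, shifted)
print(psi_same, psi_shifted)
```

A PSI of zero means the two distributions match across the buckets. A commonly cited rule of thumb treats values above roughly 0.25 as a significant shift worth investigating, though the right threshold depends on the feature and the use case.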

By adopting a structured evaluation process rooted in due diligence, organizations can mitigate significant risks, ensure compliance, and effectively leverage AI technologies to gain a competitive edge.