Top AI Research Trends from NeurIPS 2024

NeurIPS (Neural Information Processing Systems) is a leading conference in artificial intelligence research. NeurIPS 2024, held in Vancouver, Canada, was the largest edition to date, with over 4,000 papers accepted, reflecting the growing interest in AI research and the pace at which the field is moving. This article covers the top research trends that emerged from the conference and what they suggest about the future of AI.

Advancements in Large Language Models (LLMs)

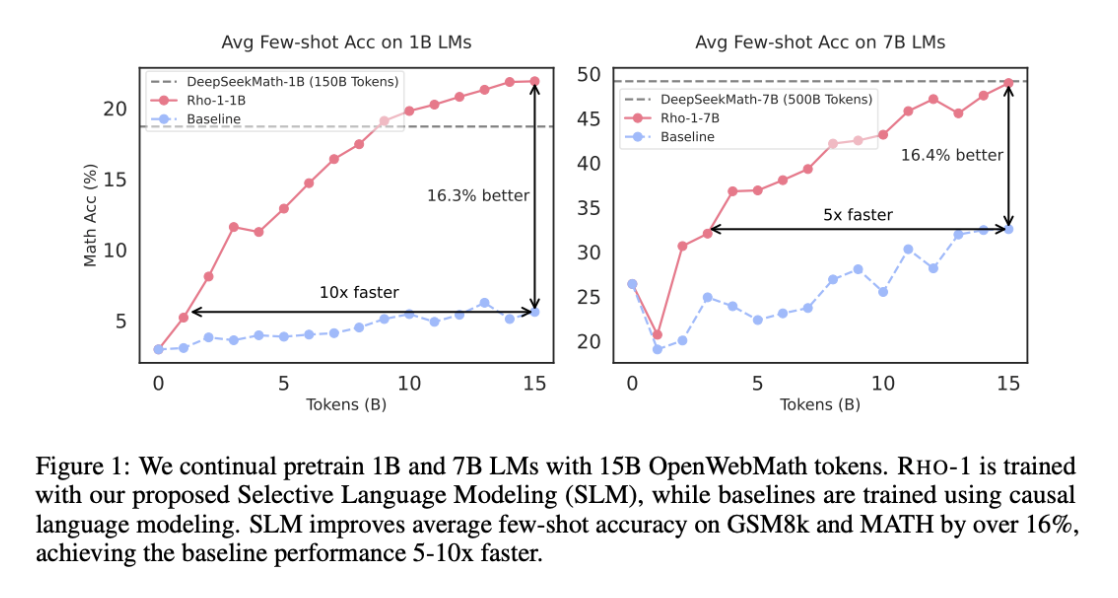

LLMs continued to be a focal point at NeurIPS 2024. Researchers presented novel techniques for improving LLM performance, efficiency, and safety. One notable trend was the exploration of selective token prediction for pre-training. The paper "Not All Tokens Are What You Need for Pretraining" introduced the Rho-1 language model, which is trained to predict only the most informative tokens rather than every token in the corpus. This approach, called Selective Language Modeling (SLM), scores tokens using a reference model and then trains the language model with a loss focused on the tokens with higher scores. Training only on these useful tokens improves accuracy while using the training data more efficiently. For example, when continually pre-trained on the 15B-token OpenWebMath corpus, Rho-1 yields an absolute improvement in few-shot accuracy of up to 30% across 9 math tasks. This demonstrates the potential for more data-efficient LLM training.
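
The token selection step described above can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the score used here is the excess loss (training-model loss minus reference-model loss), and the function name `selective_lm_loss` and the `keep_ratio` parameter are hypothetical names introduced for this example.

```python
def selective_lm_loss(train_losses, ref_losses, keep_ratio=0.6):
    """Average the training loss over only the highest-scoring tokens.

    train_losses: per-token loss of the model being trained.
    ref_losses:   per-token loss of the reference model on the same tokens.
    keep_ratio:   fraction of tokens kept (hypothetical default, an assumption).
    """
    if len(train_losses) != len(ref_losses):
        raise ValueError("loss lists must have the same length")

    # Assumed score: how much worse the training model is than the reference.
    scores = [t - r for t, r in zip(train_losses, ref_losses)]

    # Keep the top-k tokens by score (at least one token).
    k = max(1, int(len(scores) * keep_ratio))
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    selected = sorted(ranked[:k])

    # The focused loss ignores every token that was not selected.
    loss = sum(train_losses[i] for i in selected) / k
    return loss, selected


# Toy per-token losses for a four-token sequence.
loss, selected = selective_lm_loss(
    train_losses=[2.0, 0.5, 3.0, 1.0],
    ref_losses=[1.9, 0.4, 1.0, 0.2],
    keep_ratio=0.5,
)
print(loss, selected)
```

In this toy input, the third and fourth tokens have the largest gap between the two models, so only they contribute to the loss. Tokens that the reference model also finds hard are treated as less useful and skipped.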

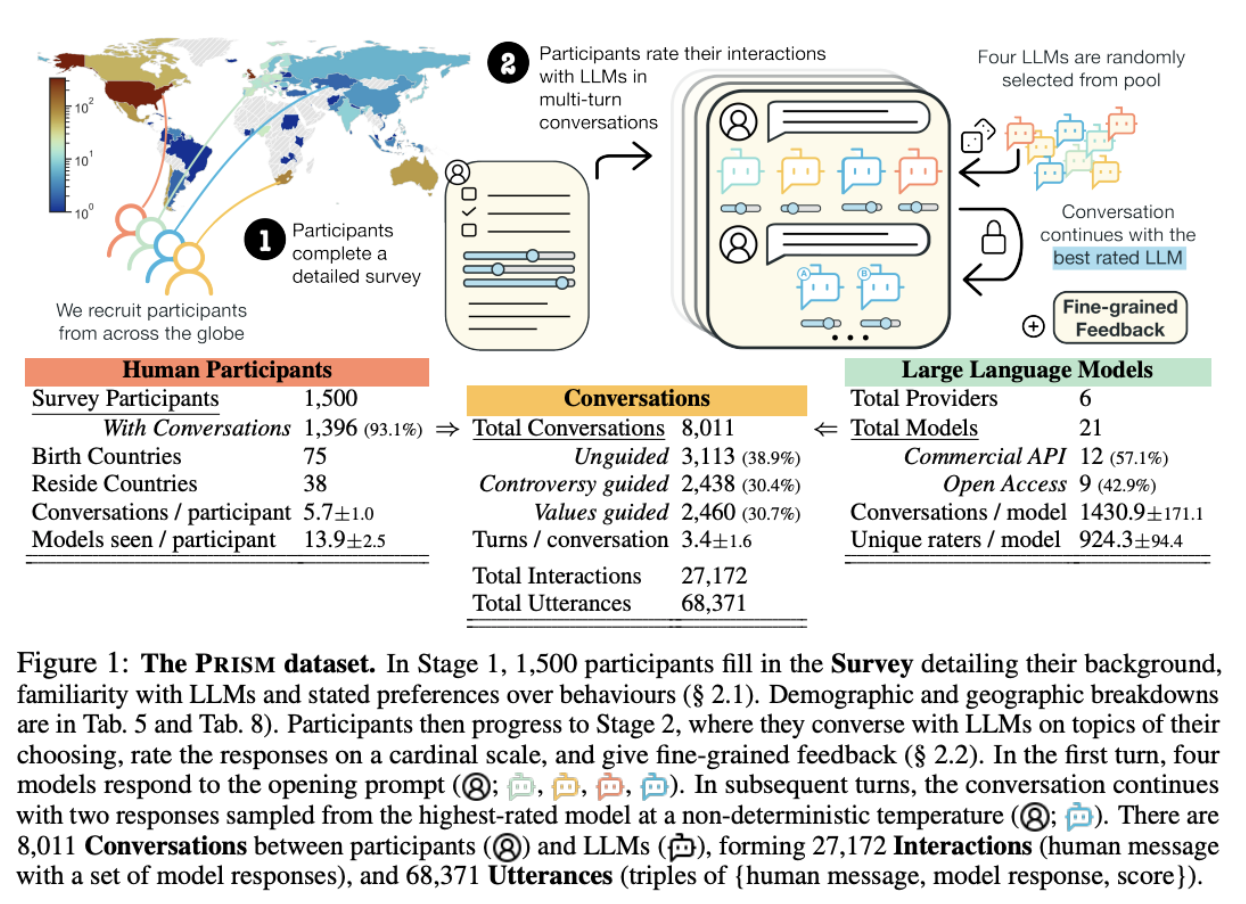

Another key area of focus was LLM alignment, which refers to the process of ensuring that LLMs behave in accordance with human values and intentions. This is crucial for building safe and trustworthy AI systems. The award for the best paper in the Datasets & Benchmarks track went to "The PRISM Alignment Dataset: What Participatory, Representative and Individualised Human Feedback Reveals About the Subjective and Multicultural Alignment of Large Language Models". This paper introduced PRISM, a new dataset that maps the sociodemographics and stated preferences of 1,500 diverse participants from 75 countries to their contextual preferences and fine-grained feedback in 8,011 live conversations with 21 LLMs. PRISM targets subjective and multicultural perspectives on value-laden and controversial issues, where interpersonal and cross-cultural disagreement is expected. This dataset provides a valuable resource for researchers studying LLM alignment and developing methods to mitigate biases.

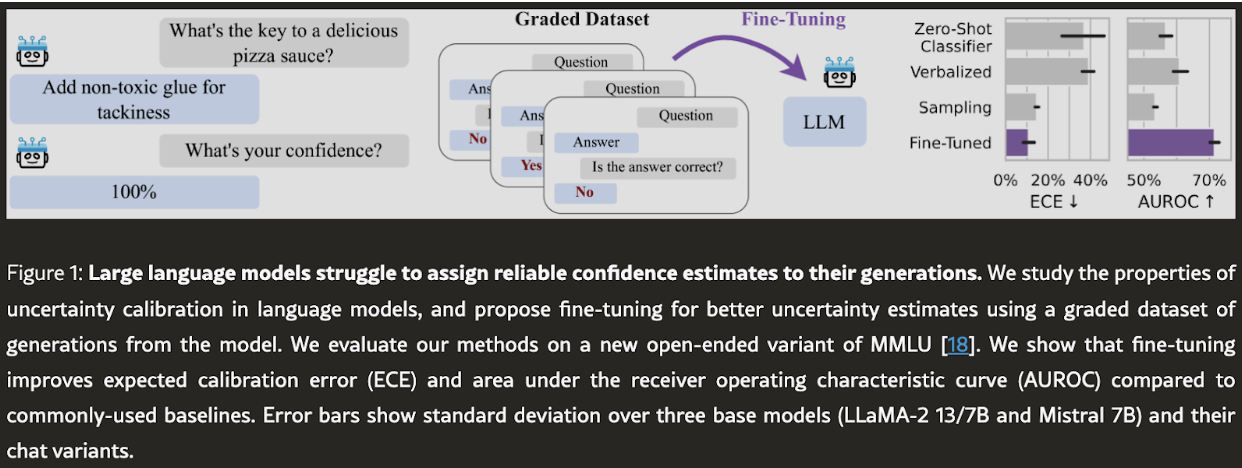

Furthermore, researchers explored methods to improve the factuality and reliability of LLMs. The paper "Large Language Models Must Be Taught to Know What They Don't Know" demonstrated that fine-tuning LLMs on a small dataset of correct and incorrect answers can significantly improve their ability to estimate uncertainty. The fine-tuned model acts as an uncertainty estimator, and the researchers found that a thousand graded examples were sufficient to outperform baseline methods. Reliable uncertainty estimation is crucial for deploying LLMs responsibly in applications where accuracy and trustworthiness are paramount, such as medical diagnosis, legal advice, and financial forecasting. By knowing when an LLM is uncertain, users can make more informed decisions and avoid relying on potentially inaccurate or misleading information, which can lead to better outcomes in high-stakes scenarios and increased trust in AI-powered systems.
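
To make the idea of learning from graded examples concrete, here is a deliberately simplified stand-in. It does not fine-tune an LLM. Instead it fits a small logistic-regression probe that maps a hypothetical scalar feature of each answer (for instance, a raw confidence score) to the probability that the answer was graded correct. The function names, the feature, and the toy data are all assumptions made for illustration.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def fit_correctness_probe(features, graded, lr=0.5, epochs=500):
    """Fit logistic regression with batch gradient descent.

    features: list of feature vectors, one per answered question.
    graded:   list of 0/1 labels, 1 if the answer was graded correct.
    """
    dim = len(features[0])
    weights, bias = [0.0] * dim, 0.0
    n = len(features)
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * dim, 0.0
        for x, y in zip(features, graded):
            p = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)
            err = p - y  # gradient of the log loss w.r.t. the logit
            for j in range(dim):
                grad_w[j] += err * x[j]
            grad_b += err
        weights = [w - lr * g / n for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / n
    return weights, bias


def predict_correct(weights, bias, x):
    """Estimated probability that an answer with features x is correct."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


# Toy graded set: one hypothetical confidence feature per answer.
features = [[0.9], [0.8], [0.7], [0.3], [0.2], [0.1]]
graded = [1, 1, 1, 0, 0, 0]
weights, bias = fit_correctness_probe(features, graded)
print(predict_correct(weights, bias, [0.95]), predict_correct(weights, bias, [0.05]))
```

The same pattern of a small labeled set and a supervised correctness target is what the paragraph above describes. The paper applies it to the language model itself rather than to a single hand-picked feature.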

Innovative Approaches to Image Generation

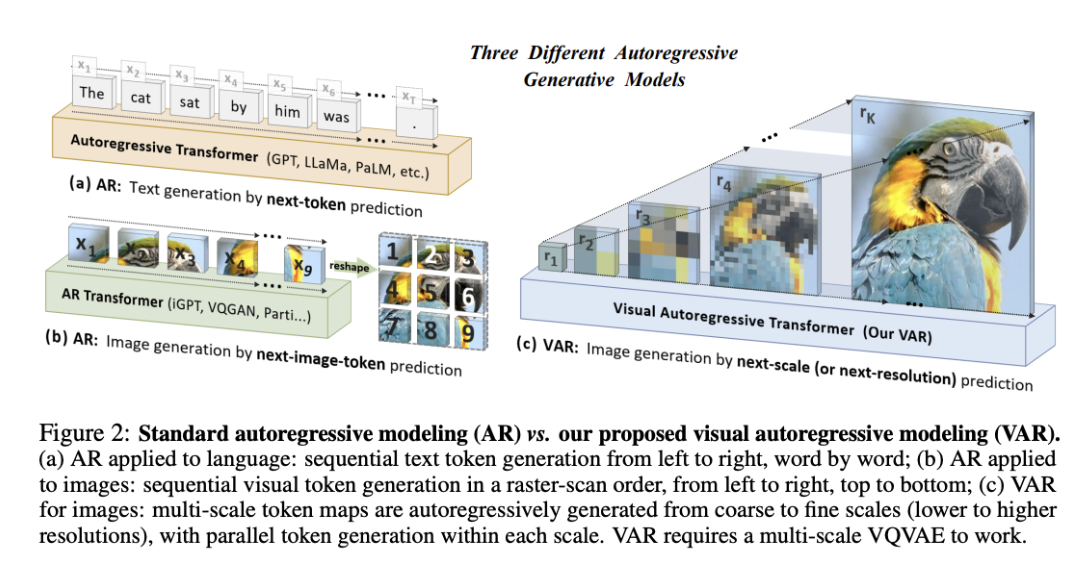

Although LLMs dominated much of the discussion, NeurIPS 2024 also witnessed exciting advancements in other areas of AI, including image generation. A Best Paper award in the main track went to "Visual Autoregressive Modeling: Scalable Image Generation via Next-Scale Prediction". This paper introduced a visual autoregressive (VAR) model that generates images from coarse to fine, iteratively predicting the image at increasingly higher resolutions. This approach, called "next-scale prediction" or "next-resolution prediction", diverges from the standard raster-scan "next-token prediction". VAR relies on a multi-scale VQ-VAE, which quantizes a feature map into multiple token maps at increasingly higher resolutions. The approach outperformed existing autoregressive models in efficiency and achieved results competitive with diffusion-based methods, opening up new possibilities for scalable and high-quality image generation.
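
A small back-of-the-envelope sketch shows why predicting whole scales can be more efficient than predicting single tokens. It assumes that all tokens within one scale are emitted in a single autoregressive step, and it uses a hypothetical coarse-to-fine schedule of token-map side lengths ending at 16 x 16. The schedule and function names are illustrative assumptions.

```python
def raster_scan_steps(side):
    """Sequential steps when emitting one token at a time over a side x side map."""
    return side * side


def next_scale_plan(scales):
    """Return (steps, total_tokens) when each step emits a whole s x s token map."""
    steps = len(scales)
    total_tokens = sum(s * s for s in scales)
    return steps, total_tokens


# Hypothetical schedule of token-map side lengths, coarse to fine.
SCALES = (1, 2, 3, 4, 5, 6, 8, 10, 13, 16)

print(raster_scan_steps(16))    # 256 sequential steps
print(next_scale_plan(SCALES))  # (10, 680): 10 steps, 680 tokens in total
```

Under these assumptions the next-scale model produces more tokens overall, but needs far fewer sequential steps because each step fills an entire token map.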

One of the key advantages of VAR is its ability to learn visual distributions quickly and generalize well. This is achieved by decomposing the probability of a sequence into the product of conditional probabilities, assuming unidirectional token dependency. By predicting the image at increasingly higher resolutions, VAR can capture the hierarchical structure of images and generate more realistic and detailed outputs. This approach has shown promising results on the ImageNet 256x256 benchmark, significantly improving FID and IS scores compared to existing autoregressive models. Moreover, VAR exhibits zero-shot generalization ability in downstream tasks like image in-painting, out-painting, and editing, further demonstrating its potential for various image generation applications.
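
The factorization mentioned above can be written out explicitly. The notation below is an assumption introduced for illustration: $x_t$ is a single token in a sequence of length $T$, and $r_k$ is the entire token map at scale $k$ out of $K$ scales. Standard next-token prediction factorizes over tokens, while next-scale prediction applies the same unidirectional factorization over token maps:

$$
p(x_1, \dots, x_T) = \prod_{t=1}^{T} p(x_t \mid x_1, \dots, x_{t-1}),
\qquad
p(r_1, \dots, r_K) = \prod_{k=1}^{K} p(r_k \mid r_1, \dots, r_{k-1}).
$$

In the second form, each factor conditions a finer token map on all coarser ones, which is how the model captures the hierarchical structure of an image.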

Another interesting development in image generation was the exploration of optical diffusion models. These models leverage the properties of light propagation to implement denoising diffusion models, offering a potential solution for high-speed and energy-efficient image generation. By projecting noisy image patterns through passive diffractive optical layers, these models can generate images with minimal power consumption, taking advantage of the bandwidth and energy efficiency of optical information processing.

Advancements in image generation have far-reaching implications for various industries. In the entertainment industry, VAR can be used to create realistic and detailed characters, objects, and environments for video games and animated films. In design and advertising, it can generate high-quality product visualizations and marketing materials. The development of optical diffusion models could lead to new hardware for image generation, potentially enabling real-time, high-resolution image generation on devices with limited power consumption, such as mobile phones or augmented reality glasses.

Enhancing Neural Networks with Higher-Order Derivatives

Researchers also explored the use of higher-order derivatives to enhance the training and performance of neural networks. The paper "Stochastic Taylor Derivative Estimator: Efficient Amortization for Arbitrary Differential Operators" proposed a tractable approach to training neural networks with supervision that incorporates higher-order derivatives. The method, STDE, efficiently performs arbitrary contractions of the derivative tensor of arbitrary order for multivariate functions by suitably constructing the input tangents passed to univariate high-order automatic differentiation (AD). This is particularly relevant for applications like physics-informed neural networks (PINNs) and “world models,” where incorporating higher-order derivatives can lead to more accurate and robust models.

Traditional methods for optimizing neural networks with high-dimensional and high-order differential operators face significant computational challenges for two reasons: the size of the derivative tensor grows with the dimension of the domain (d), and the size of the computational graph grows with the number of operations (L) and the order of the derivative (k). STDE addresses these challenges by combining randomization techniques with high-order automatic differentiation. By carefully constructing input tangents, STDE enables efficient tensor contractions for multivariate functions, leading to significant speed and memory improvements for PINNs. In practical evaluations, STDE demonstrated over 1000x speed improvement and 30x memory reduction compared to traditional methods, solving 1-million-dimensional PDEs in 8 minutes on a single NVIDIA A100 GPU.
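
The combination of random tangents and univariate high-order differentiation can be illustrated on the simplest case, the Laplacian. The sketch below is not the paper's implementation. It propagates a second-order truncated Taylor series along a random direction v, reads off the second directional derivative (which equals v^T H v for the Hessian H), and averages over random sign vectors, which is a Hutchinson-style trace estimate. The `Jet2` class and all function names are hypothetical and written only for this example.

```python
import math
import random


class Jet2:
    """Second-order truncated Taylor series c0 + c1*t + c2*t**2 in a scalar t."""

    def __init__(self, c0, c1=0.0, c2=0.0):
        self.c0, self.c1, self.c2 = c0, c1, c2

    @staticmethod
    def lift(value):
        return value if isinstance(value, Jet2) else Jet2(float(value))

    def __add__(self, other):
        o = Jet2.lift(other)
        return Jet2(self.c0 + o.c0, self.c1 + o.c1, self.c2 + o.c2)

    __radd__ = __add__

    def __mul__(self, other):
        o = Jet2.lift(other)
        # Product of two series, dropping terms above t**2.
        return Jet2(
            self.c0 * o.c0,
            self.c0 * o.c1 + self.c1 * o.c0,
            self.c0 * o.c2 + self.c1 * o.c1 + self.c2 * o.c0,
        )

    __rmul__ = __mul__


def jet_sin(u):
    """sin applied to a Jet2, truncated at second order."""
    s, c = math.sin(u.c0), math.cos(u.c0)
    return Jet2(s, c * u.c1, c * u.c2 - 0.5 * s * u.c1 * u.c1)


def second_directional_derivative(f, x, v):
    """d^2/dt^2 of f(x + t*v) at t = 0, computed in one forward pass."""
    out = f([Jet2(xi, vi) for xi, vi in zip(x, v)])
    return 2.0 * out.c2


def estimate_laplacian(f, x, num_samples, rng):
    """Average v^T H v over random +/-1 tangents; its expectation is trace(H)."""
    total = 0.0
    for _ in range(num_samples):
        v = [rng.choice((-1.0, 1.0)) for _ in x]
        total += second_directional_derivative(f, x, v)
    return total / num_samples


def example_fn(x):
    """Toy function with exact Laplacian -sin(x0) + 6*x1."""
    return x[0] * x[1] + jet_sin(x[0]) + x[1] * x[1] * x[1]


point = [0.3, 1.2]
exact = -math.sin(point[0]) + 6.0 * point[1]
approx = estimate_laplacian(example_fn, point, 4000, random.Random(0))
print(exact, approx)
```

The full Hessian is never formed here: each sample costs one forward pass whose size does not depend on the number of second derivatives in the operator. That is the property the paragraph above attributes to carefully constructed input tangents.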

The ability to efficiently train neural networks with higher-order derivatives has significant implications for scientific computing, engineering, and other fields that rely on solving complex differential equations. For example, in fluid dynamics, STDE could be used to develop more accurate and efficient simulations of turbulent flows, leading to improved designs for aircraft, cars, and other vehicles. In finance, it could be used to model complex financial instruments. In biomechanics and biophysics, it could be used to develop more accurate models of biological systems, such as the human heart or musculoskeletal system. These models can simulate the effects of diseases, injuries, or treatments, leading to a better understanding of human physiology and improved healthcare interventions. This breakthrough opens up new possibilities for using higher-order differential operators in large-scale problems across these domains.

NeurIPS 2024 Test of Time Award

The "Test of Time" award at NeurIPS recognizes papers that have had a significant and lasting impact on the field of AI. This year, the awards went to two groundbreaking papers: "Generative Adversarial Nets" and "Sequence to Sequence Learning with Neural Networks". "Generative Adversarial Nets" introduced a framework where a generative model is pitted against an adversary: a discriminative model that learns to determine whether a sample is from the model distribution or the data distribution. This has been foundational for generative modeling, inspiring numerous research advances and applications. "Sequence to Sequence Learning with Neural Networks" introduced the encoder-decoder architecture, which uses one LSTM to map the input sequence to a fixed-dimensional vector and another LSTM to decode the output sequence from that vector. This has been instrumental in the development of today's foundation models and large language models.
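
For reference, the adversarial game between the generative and discriminative models described above is commonly written as a minimax objective. The symbols are notation assumed here, not taken from this article: $G$ is the generator, $D$ the discriminator, $p_{\text{data}}$ the data distribution, and $p_z$ a noise prior.

$$
\min_G \max_D \; V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}(x)}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z(z)}\big[\log\big(1 - D(G(z))\big)\big]
$$

The discriminator is trained to increase $V$ by telling real samples from generated ones, while the generator is trained to decrease it by producing samples the discriminator accepts as real.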

These foundational papers have already had a significant impact on healthcare and life sciences. GANs are used in applications like drug discovery, medical image synthesis, and disease prediction. The encoder-decoder architecture is widely used in tasks like medical text summarization, protein structure prediction, and genomic sequence analysis. The continued recognition of these papers highlights their lasting importance and the ongoing influence they have on AI research and industries like healthcare and life sciences.

Conclusion

NeurIPS 2024 provided a glimpse into the future of AI, with advancements in LLMs, image generation, and neural networks trained with higher-order derivatives taking center stage. The conference also showcased the growing importance of responsible AI development, with a focus on LLM alignment, factuality, and data quality. This emphasis reflects a growing awareness of the ethical considerations surrounding AI and the need to ensure that AI systems are fair, reliable, and beneficial to society. These trends are likely to shape the AI landscape in the years to come.

Citation counts for NeurIPS 2024 papers were not yet available at the time of the conference, so they cannot be used to rank this year's work. The "Test of Time" award winners, however, are among the most influential papers in the field, and their recognition suggests a continued focus on building upon foundational research and pushing the boundaries of AI capabilities.

The trends observed at NeurIPS 2024 have significant implications for various industries and aspects of society. Advancements in LLMs could revolutionize how we interact with computers and access information, while progress in image generation could transform creative fields and lead to new forms of artistic expression. However, it's essential to address the ethical considerations surrounding these developments, such as the potential for job displacement, privacy concerns, and the misuse of AI. By promoting open discussions about the societal impact of AI and sharing scientific research, we can harness the innovations in AI for all.