AI Confidence Metrics and Their Interpretation in Protein Structure Prediction

Using AI predictions effectively depends on understanding the confidence metrics that accompany predicted structures. These metrics provide quantitative estimates of prediction reliability, both for individual residues and for the relative placement of residues and domains, and they guide model interpretation and experimental planning. This primer covers what these measures estimate, their strengths and limitations, and best practices for evaluating them critically.

Confidence Metrics in AI Predictions

Confidence metrics serve as vital tools that help structural biologists differentiate between reliable and uncertain regions within AI-predicted models. Without these metrics, users risk misinterpreting predictions, potentially leading to erroneous biological conclusions.

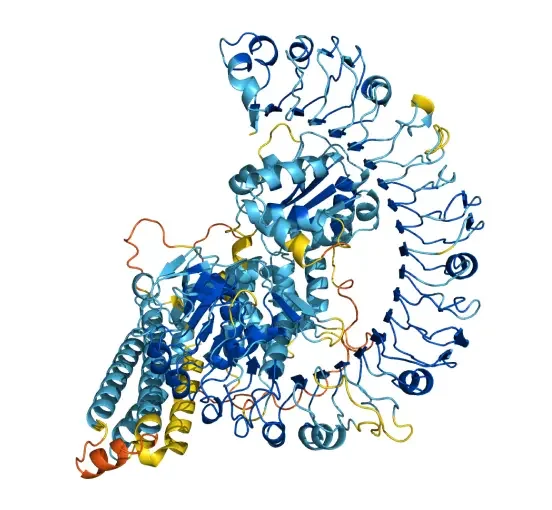

AlphaFold2 introduced the predicted local distance difference test (pLDDT), a per-residue score from 0 to 100 that estimates how well the local structure around each residue would agree with an experimental structure, as measured by the lDDT-Cα metric [Jumper et al., 2021]. High pLDDT scores (>90) suggest close agreement with experimental structures, while low scores (<50) often correspond to intrinsically disordered or flexible regions. Because the score is assigned per residue, researchers can separate well-modeled domains from regions that call for caution.
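AlphaFold2 writes pLDDT into the B-factor column of the PDB files it outputs, so per-residue scores can be recovered with a few lines of standard-library Python. The sketch below is a minimal illustration, not a full PDB parser: it assumes single-model, fixed-width ATOM records and reads the score from each C-alpha atom. The function names are hypothetical; the >90 and <50 cut-offs are the ones quoted in this section, and the intermediate boundary at 70 is an assumption borrowed from the banding used by the AlphaFold Protein Structure Database.

```python
# Minimal sketch (not a full PDB parser): recover per-residue pLDDT from an
# AlphaFold2-style PDB file and bin it into confidence bands.
# Assumptions: single model, fixed-width ATOM records, pLDDT stored in the
# B-factor field (columns 61-66), one score read per C-alpha atom.


def read_plddt_from_pdb(pdb_text: str) -> dict[tuple[str, int], float]:
    """Return {(chain_id, residue_number): pLDDT} using C-alpha atoms only."""
    plddt = {}
    for line in pdb_text.splitlines():
        # Atom name occupies columns 13-16 (0-based slice 12:16).
        if line.startswith("ATOM") and line[12:16].strip() == "CA":
            chain = line[21]               # column 22: chain identifier
            resnum = int(line[22:26])      # columns 23-26: residue number
            plddt[(chain, resnum)] = float(line[60:66])  # B-factor field
    return plddt


def plddt_band(score: float) -> str:
    """Map a pLDDT score to a confidence band.

    The >90 and <50 cut-offs follow the text; the boundary at 70 is an
    assumed convention taken from the AlphaFold Protein Structure Database.
    """
    if score > 90:
        return "very high"
    if score >= 70:
        return "confident"
    if score >= 50:
        return "low"
    return "very low"


if __name__ == "__main__":
    print(plddt_band(93.5))  # → very high
    print(plddt_band(42.0))  # → very low
```

Reading only C-alpha atoms gives one value per residue; AlphaFold2 writes the same residue-level pLDDT to every atom of a residue, so any single atom would serve equally well.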

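Complementing pLDDT, the predicted aligned error (PAE) estimates, for every pair of residues, the expected error in ångströms in the position of one residue when the predicted and true structures are superimposed on the other. PAE was reported alongside pLDDT for AlphaFold2 [Jumper et al., 2021] and is also produced for multi-chain complexes by AlphaFold-Multimer [Evans et al., 2022]. Because it reflects the relative placement of residues rather than local geometry, PAE is particularly useful for evaluating inter-domain and inter-subunit arrangements, which are inherently more challenging to predict.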
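To judge how confidently two domains are placed relative to each other, a common approach is to average the PAE values in the off-diagonal block linking them. The following is a minimal sketch, assuming the PAE matrix has already been loaded as a square nested list in ångströms with 0-based indices, where `pae[i][j]` is the error at residue `j` when the structures are aligned on residue `i`. Domain boundaries are user-supplied, and the function names are hypothetical; loading the matrix from a specific file format is left out.

```python
# Minimal sketch: summarise a PAE matrix between two residue ranges.
# Assumptions: `pae` is a square nested list (Å), pae[i][j] is the expected
# error at residue j when aligned on residue i, indices are 0-based, and
# domain boundaries are provided by the user.
from statistics import mean


def mean_block_pae(pae: list[list[float]], aligned: range, scored: range) -> float:
    """Mean PAE when aligning on `aligned` residues and scoring `scored` ones."""
    return mean(pae[i][j] for i in aligned for j in scored)


def inter_domain_pae(pae: list[list[float]], dom_a: range, dom_b: range) -> float:
    """PAE is not symmetric, so average both off-diagonal blocks."""
    return (mean_block_pae(pae, dom_a, dom_b) + mean_block_pae(pae, dom_b, dom_a)) / 2


if __name__ == "__main__":
    # Toy 4-residue model: residues 0-1 and 2-3 form two compact domains.
    toy = [
        [0.5, 1.0, 20.0, 22.0],
        [1.0, 0.5, 21.0, 23.0],
        [18.0, 19.0, 0.5, 1.0],
        [20.0, 21.0, 1.0, 0.5],
    ]
    print(mean_block_pae(toy, range(0, 2), range(0, 2)))    # → 0.75
    print(inter_domain_pae(toy, range(0, 2), range(2, 4)))  # → 20.5
```

Low values on the diagonal blocks with high values off the diagonal, as in this toy matrix, describe two internally well-defined domains whose relative orientation is uncertain.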

Interpreting Confidence Scores

While confidence metrics provide quantitative guidance, interpreting them requires biological and experimental knowledge. For example, low-confidence regions may reflect genuine conformational flexibility rather than modeling failure [Uversky, 2019]. Assigning biological meaning to a confidence score therefore means recognizing that structural disorder is a functional feature of many proteins.
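Because a low-pLDDT stretch is a candidate for genuine disorder rather than proof of it, a practical first step is to list such stretches so they can be compared with disorder annotations or dedicated disorder predictors. This sketch assumes a plain list of per-residue pLDDT values; the function name and the minimum segment length of 10 residues are illustrative assumptions, and the default threshold of 50 follows the cut-off quoted for disordered regions.

```python
# Minimal sketch: list contiguous low-pLDDT segments as candidate disordered
# regions. Assumptions: `plddt` holds one score per residue in sequence
# order; the threshold (50) and minimum length (10) are illustrative.


def low_confidence_segments(plddt: list[float], threshold: float = 50.0,
                            min_length: int = 10) -> list[tuple[int, int]]:
    """Return 1-based, inclusive (start, end) spans with pLDDT < threshold."""
    segments = []
    start = None
    for idx, score in enumerate(plddt, start=1):
        if score < threshold:
            if start is None:
                start = idx  # open a new low-confidence run
        elif start is not None:
            # Run ended at the previous residue; keep it if long enough.
            if idx - start >= min_length:
                segments.append((start, idx - 1))
            start = None
    # Close a run that extends to the C-terminus.
    if start is not None and len(plddt) - start + 1 >= min_length:
        segments.append((start, len(plddt)))
    return segments


if __name__ == "__main__":
    scores = [92.0] * 20 + [35.0] * 15 + [85.0] * 10 + [45.0] * 4
    print(low_confidence_segments(scores))  # → [(21, 35)]
```

The minimum length filters out short dips, such as single flexible loop residues, so that the output concentrates on stretches long enough to be plausible disordered regions.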

Moreover, high confidence in static atomic positions does not guarantee accurate modeling of alternative conformations, ligand-induced changes, or dynamic allostery, which remain challenging for current AI models [Evans et al., 2022]. Researchers should therefore not trust a model on the strength of its confidence scores alone, without considering its biochemical context.

Limitations and Potential Misuses

Despite their utility, confidence metrics have limitations. They are estimates produced by the model itself and do not always match experimental accuracy. For example, pLDDT does not capture systematic errors such as incorrect domain packing or unusual conformations absent from the training data [Callaway, 2022]; because it scores local structure, pLDDT can remain high within each domain even when the domains are packed incorrectly relative to one another.

Additionally, confidence scores can be misleading when applied to novel protein folds or protein complexes involving unknown interaction interfaces. Overreliance on confidence scores without corroborating data risks overlooking important structural nuances or errors.

It is therefore advisable to use confidence metrics as part of a holistic assessment strategy, combining AI outputs with orthogonal experimental data, biochemical assays, and molecular dynamics simulations when feasible.

Best Practices

To maximize the value of confidence metrics, researchers should:

Examine pLDDT and PAE maps jointly to identify both local and global uncertainties.

Interpret low-confidence regions in light of known protein disorder or flexible domains.

Use confidence data to prioritize experimental validation efforts, focusing on uncertain or functionally critical regions.

Avoid reading confidence scores as guarantees of correctness; instead, treat them as probabilistic guides.

Combine AI predictions with experimental techniques such as cross-linking mass spectrometry or cryo-EM to validate domain orientations and interfaces.
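The practices above can be combined into a simple triage pass: pLDDT checks local confidence within each domain, PAE checks the relative placement of each domain pair, and the flagged items become candidates for validation, for instance by cross-linking mass spectrometry or cryo-EM. This is a minimal sketch under stated assumptions: domain boundaries are user-supplied 0-based residue ranges, the PAE matrix is a nested list in ångströms, and the cut-offs (mean pLDDT below 70, mean inter-domain PAE above 10 Å) and the function name `triage` are hypothetical choices, not established standards.

```python
# Minimal sketch of joint pLDDT/PAE triage for prioritizing validation.
# Assumptions: 0-based residue indices, user-defined domain ranges, PAE in Å
# with pae[i][j] = error at residue j when aligned on residue i, and
# illustrative cut-offs (mean pLDDT < 70, mean inter-domain PAE > 10 Å).
from itertools import combinations
from statistics import mean


def triage(plddt, pae, domains, plddt_cutoff=70.0, pae_cutoff=10.0):
    """Return readable flags for domains or domain pairs that merit validation."""
    flags = []
    # Local check: is each domain likely to be modeled well on its own?
    for name, res in domains.items():
        score = mean(plddt[i] for i in res)
        if score < plddt_cutoff:
            flags.append(f"{name}: low local confidence (mean pLDDT {score:.1f})")
    # Global check: is the relative placement of each domain pair reliable?
    for (name_a, a), (name_b, b) in combinations(domains.items(), 2):
        # PAE is asymmetric, so pool both off-diagonal blocks.
        err = mean([pae[i][j] for i in a for j in b] +
                   [pae[j][i] for i in a for j in b])
        if err > pae_cutoff:
            flags.append(f"{name_a}-{name_b}: uncertain relative placement "
                         f"(mean PAE {err:.1f} Å)")
    return flags


if __name__ == "__main__":
    # Toy two-domain model: confident domains, uncertain relative placement.
    plddt = [92.0, 94.0, 91.0, 93.0]
    pae = [
        [0.5, 1.0, 20.0, 22.0],
        [1.0, 0.5, 21.0, 23.0],
        [18.0, 19.0, 0.5, 1.0],
        [20.0, 21.0, 1.0, 0.5],
    ]
    for flag in triage(plddt, pae, {"N": range(0, 2), "C": range(2, 4)}):
        print(flag)  # → N-C: uncertain relative placement (mean PAE 20.5 Å)
```

In the toy example both domains have a mean pLDDT above 90, yet the pair is flagged for an inter-domain PAE of 20.5 Å. This is exactly the case in which pLDDT alone would not reveal uncertain domain packing.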

Confidence metrics are indispensable tools for critically evaluating AI-predicted protein structures. Their proper interpretation, grounded in biological insight and complemented by experimental data, enables structural biologists to judge model reliability and design informed validation strategies. As AI models and metrics continue to evolve, proficiency in their use will become increasingly important for integrating computational predictions into structural biology workflows.

References

Callaway, E. (2022). ‘It will change everything’: DeepMind’s AI makes gigantic leap in solving protein structures. Nature. https://pubmed.ncbi.nlm.nih.gov/33257889/

Evans, R., et al. (2022). Protein complex prediction with AlphaFold-Multimer. bioRxiv. https://doi.org/10.1101/2021.10.04.463034

Jumper, J., et al. (2021). Highly accurate protein structure prediction with AlphaFold. Nature, 596(7873), 583–589. https://doi.org/10.1038/s41586-021-03819-2

Uversky, V.N. (2019). Intrinsically disordered proteins and their “mysterious” (meta)physics. Frontiers in Physics, 7, 10. https://doi.org/10.3389/fphy.2019.00010